All solid-state LiDAR sensor that maps a full 180-degree field of view in 3D

A revolutionary technology in the world of sensing: an all-solid-state LiDAR sensor that sees a full 180 degrees

South Korean researchers have created an ultra-small, ultra-thin LiDAR device that divides a single laser beam into 10,000 points and covers an unprecedented 180-degree field of view. It can 3D depth-map an entire hemisphere of vision in a single shot.

Autonomous vehicles and robots must perceive their surroundings extremely accurately if they are to be safe and useful in real-world scenarios. In humans and other autonomous biological entities, this requires a variety of different senses and some pretty extraordinary real-time data processing, and the same will likely be true for our technological offspring.

LiDAR (Light Detection and Ranging) has been around since the 1960s and is now a well-established range-finding technology that is especially useful in developing 3D point-cloud representations of a given space. Similar to sonar, LiDAR devices send out short pulses of laser light and then measure the light that is reflected or backscattered when those pulses strike an object.

The distance between the LiDAR unit and a given point in space is calculated by multiplying the time between the initial light pulse and the returned pulse by the speed of light and dividing by two.
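As a quick worked example of that calculation, here is a minimal Python sketch. The function name `tof_distance_m` and the sample round-trip time are illustrative assumptions and do not come from the study.

```python
# Speed of light in vacuum, in metres per second (exact by SI definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458


def tof_distance_m(round_trip_time_s: float) -> float:
    """Distance to a target from the round-trip time of a light pulse.

    The pulse travels out and back, so the one-way distance is half
    of (time * speed of light).
    """
    if round_trip_time_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return round_trip_time_s * SPEED_OF_LIGHT_M_PER_S / 2


# Hypothetical reading: an echo arriving 100 nanoseconds after the pulse.
print(f"{tof_distance_m(100e-9):.2f} m")
```

An echo that returns after 100 nanoseconds corresponds to a target roughly 15 m away, which shows how fine the timing has to be for centimeter-level accuracy.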

One of the main issues with current LiDAR technology, according to researchers at South Korea's Pohang University of Science and Technology (POSTECH), is its field of view.

The only way to image a large area from a single point is to mechanically rotate your LiDAR device or rotate a mirror to direct the beam. This type of equipment can be heavy, power-hungry, and fragile. It tends to wear out quickly, and the rotational speed limits how frequently you can measure each point, lowering the frame rate of your 3D data.

Solid-state LiDAR systems, in contrast, have no physical moving parts.

According to the researchers, some of them project an array of dots all at once and look for distortion in the dots and patterns to discern shape and distance information. However, the field of view and resolution are limited, and the team claims that the devices are still relatively large.

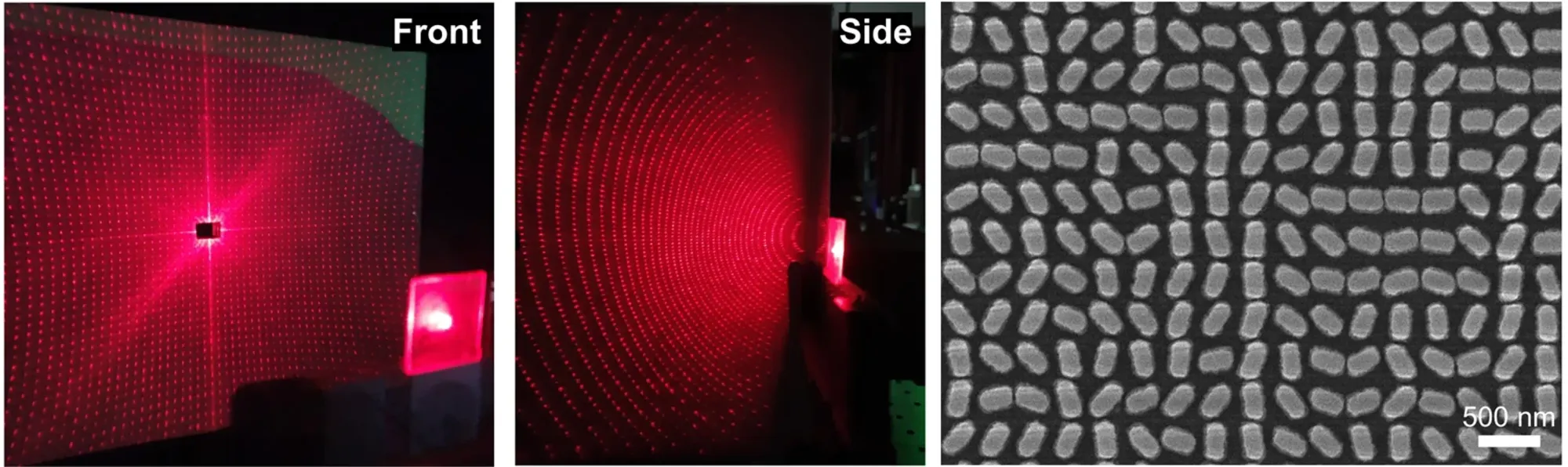

The POSTECH team turned to metasurfaces instead. These two-dimensional nanostructures, one thousandth the width of a human hair, can be thought of as ultra-flat lenses made up of arrays of tiny, precisely shaped nanopillar elements.

As light passes through a metasurface, it is split into several directions, and with the right nanopillar array design, portions of that light can be diffracted at angles of nearly 90 degrees. The result is, in effect, a completely flat ultra-fisheye lens.
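The link between feature size and bending angle can be illustrated with the textbook grating equation for light at normal incidence, sin(θ) = m·λ/Λ, where λ is the wavelength, Λ is the period of the repeating structure, and m is the diffraction order. The sketch below is only an illustration of that relationship, not the team's design method; the 633 nm wavelength and the sample periods are assumed values.

```python
import math
from typing import Optional


def diffraction_angle_deg(wavelength_nm: float, period_nm: float,
                          order: int = 1) -> Optional[float]:
    """Angle of a diffraction order for normally incident light.

    Uses the grating equation sin(theta) = order * wavelength / period.
    Returns None when the order cannot propagate (|sin(theta)| > 1).
    """
    if wavelength_nm <= 0 or period_nm <= 0:
        raise ValueError("wavelength and period must be positive")
    sin_theta = order * wavelength_nm / period_nm
    if abs(sin_theta) > 1:
        return None
    return math.degrees(math.asin(sin_theta))


WAVELENGTH_NM = 633.0  # assumed red laser wavelength, for illustration only

# Assumed sample periods, from micron-scale down to just above the wavelength.
for period_nm in (10_000, 2_000, 1_000, 640):
    angle = diffraction_angle_deg(WAVELENGTH_NM, period_nm)
    print(f"period {period_nm:>6} nm -> first order at {angle:.1f} degrees")
```

With micron-scale periods the first order stays within a few tens of degrees of the beam axis. The angle approaches 90 degrees only as the period shrinks toward the wavelength itself, which is why structuring light at the subwavelength scale matters for an ultra-wide field of view.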

The researchers created a device that shoots laser light through a metasurface lens with nanopillars tuned to split it into approximately 10,000 dots, covering an extreme 180-degree field of view. The device then uses a camera to interpret the reflected or backscattered light to provide distance measurements.

"We have proved that we can control the propagation of light in all angles by developing a technology more advanced than the conventional metasurface devices," said Professor Junsuk Rho, co-author of a new study published in Nature Communications. "This will be an original technology that will enable an ultra-small and full-space 3D imaging sensor platform."

The approach has limitations. As diffraction angles become more extreme, light intensity decreases; a dot bent to a 10-degree angle reached its target at four to seven times the power of one bent out closer to 90 degrees.

The researchers say that higher-powered lasers and finer-tuned metasurfaces will expand the sweet spot of these sensors, but high resolution at greater distances will always be a challenge with ultra-wide lenses like these.

Image processing is another potential limitation here. The "coherent point drift" algorithm used to decode sensor data into a 3D point cloud is extremely complex, and processing time increases as the number of points increases. So high-resolution full-frame captures decoding 10,000 points or more will put a strain on processors, and getting such a system to run at 30 frames per second will be difficult.
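To give a rough sense of why point count matters: in its basic form, coherent point drift repeatedly computes, for every pair of template and observed points, the probability that the two correspond. Below is a naive pure-Python sketch of that soft-assignment step, following the standard Gaussian-mixture formulation with the outlier term left out. It is not the team's implementation, and the function name and toy data are assumptions made for illustration.

```python
import math


def correspondence_probabilities(observed, template, sigma2):
    """Naive soft-assignment step of a Gaussian-mixture point matcher.

    Returns P where P[m][n] is the probability that template point m
    produced observed point n. Every (m, n) pair is visited once, so the
    work per pass grows with len(template) * len(observed).
    """
    P = [[0.0] * len(observed) for _ in template]
    for n, x in enumerate(observed):
        # Gaussian weight of each template point for this observation.
        weights = [
            math.exp(-sum((xi - yi) ** 2 for xi, yi in zip(x, y)) / (2 * sigma2))
            for y in template
        ]
        total = sum(weights)
        for m, w in enumerate(weights):
            P[m][n] = w / total if total > 0 else 0.0
    return P


# Toy 2D data (hypothetical): three template dots, slightly shifted observations.
template = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
observed = [(0.1, 0.0), (1.1, 0.1), (0.0, 0.9)]
P = correspondence_probabilities(observed, template, sigma2=0.05)

# Index of the most likely template dot for each observation.
best = [max(range(len(template)), key=lambda m: P[m][n])
        for n in range(len(observed))]
print(best)

# Pairwise terms per pass when 10,000 dots are matched against 10,000 detections.
print(10_000 * 10_000)
```

At 10,000 points on each side, that is 100 million pairwise terms per pass, and the algorithm needs many passes to converge. Faster approximations of this step exist, but the scaling illustrates why real-time frame rates are hard to reach.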

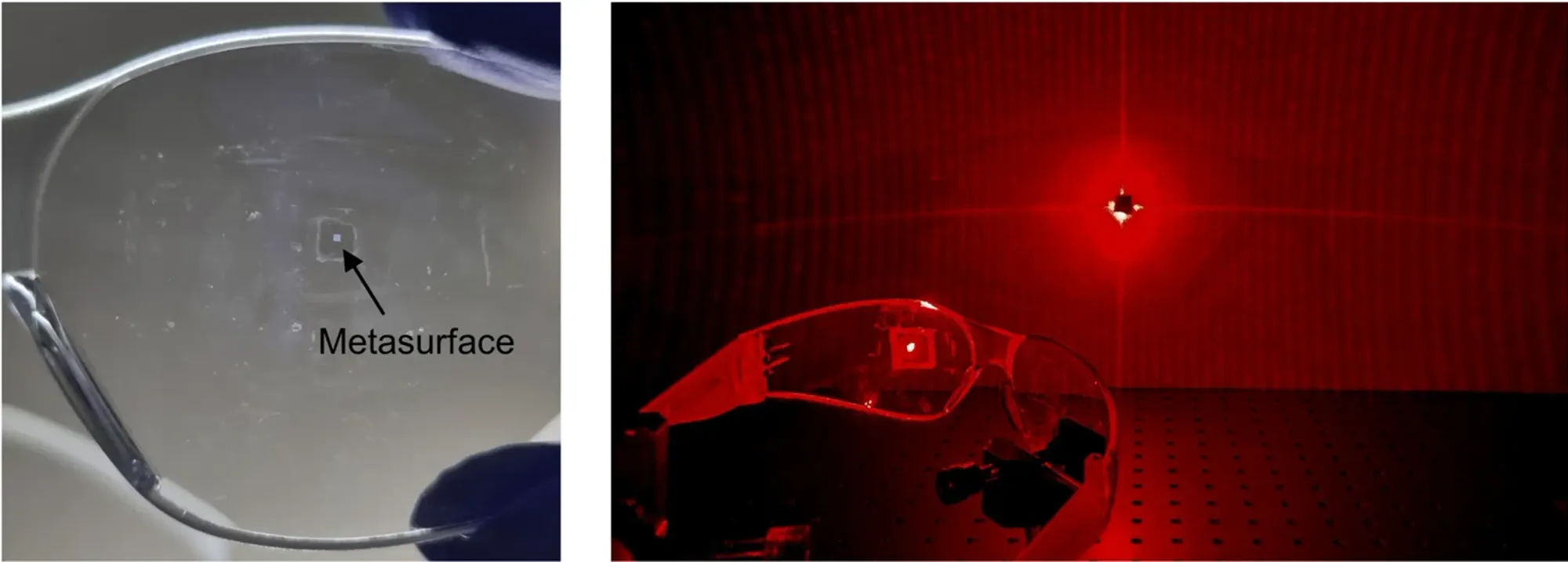

On the other hand, these devices are incredibly small, and metasurfaces can be mass-produced easily and cheaply. The team printed one onto the curved surface of a pair of safety glasses. It is so small that you couldn't tell it apart from a speck of dust.

The team believes that these devices have enormous potential in areas such as mobile devices, robotics, self-driving cars, and VR/AR glasses.

Abstract

Structured light (SL)-based depth-sensing technology illuminates the objects with an array of dots, and backscattered light is monitored to extract three-dimensional information. Conventionally, diffractive optical elements have been used to form laser dot array, however, the field-of-view (FOV) and diffraction efficiency are limited due to their micron-scale pixel size. Here, we propose a metasurface-enhanced SL-based depth-sensing platform that scatters high-density ~10 K dot array over the 180° FOV by manipulating light at subwavelength-scale. As a proof-of-concept, we place face masks one on the beam axis and the other 50° apart from axis within distance of 1 m and estimate the depth information using a stereo matching algorithm. Furthermore, we demonstrate the replication of the metasurface using the nanoparticle-embedded-resin (nano-PER) imprinting method which enables high-throughput manufacturing of the metasurfaces on any arbitrary substrates. Such a full-space diffractive metasurface may afford ultra-compact depth perception platform for face recognition and automotive robot vision applications.

Read the full study in the journal Nature Communications.
